In the first phase, information technology revolutionises biology. In the next phase, biology will revolutionise information technology. And that will totally, once again, revolutionise economies. Together these represent a turning point not just in economics, but in human history. (A. Toffler & H. Toffler)

We are now seeing the emergence of technologies that will take us into a post-digital era. The quantum world is not binary. It is a place where a particle behaves like a wave; where an electron spins in opposite directions at the same time; where information can be encoded as 1, 0 or both 1 and 0 simultaneously; where two particles can be both separated and yet entangled. (Battersby)

Introduction: The Tofflers and the Third Wave

Writing for the Asian Wall Street Journal at the beginning of the 2000s, the Tofflers, Alvin and Heidi, demonstrated their combined power of insight that made them the leading futurologists of the twentieth century. With characteristic analytical rigour and intuition, they signalled a new economy, indicating:

It is now clear that the entire digital revolution is only the first phase of an even larger, longer process. If you think the revolution is over, get ready to be shocked again as information technology fully converges with and is remade by the biological revolution. In the first phase, information technology revolutionises biology. In the next phase, biology will revolutionise information technology. And that will totally, once again, revolutionise economies. Together these represent a turning point not just in economics, but in human history.

Alvin Toffler died in 2016. His research focused on digital technologies, and he wrote several best-sellers, including Future Shock (1970), The Third Wave (1980), Powershift (1990) and Creating a New Civilization (1995). The Third Wave was his description of postindustrial society, and his ideas had a profound impact on East Asian thinkers. Cowritten with Heidi Toffler, Creating a New Civilization was subtitled ‘The Politics of the Third Wave,’ representing an inevitably new world order that became a mantra for many in the 1990s, especially in Republican circles. It was then summed up in the idea that, in the Third Wave, it is knowledge rather than capital, land or labour that organises the economy. By ‘knowledge,’ they mean not only knowledge in its traditional sense such as research but also a variety of forms from ‘data, inferences, and assumptions, to values, imagination, and intuition.’ As one reviewer puts it:

Third Wave nations create and exploit this knowledge by marketing information, innovation, management, culture, advanced technology, software, education, training, medical care, and financial services to the world. De-massification characterises the Third Wave; once it has fully arrived, mass manufacturing, mass education, and mass media will no longer exist.

These ideas have now passed into the history of the discourse and knowledge development policies around the concept of the knowledge economy. It is a discourse that underwent nine waves of discursive transformation, of both left and right persuasion:

- knowledge society (postindustrial sociology),

- information economy,

- Post-Fordist flexible accumulation,

- endogenous growth theory,

- ‘building the knowledge economy,’

- innovation economy – learning and creative economies,

- information and networked society,

- open knowledge economy,

- biodigitalism.

The Third Wave is a stage theory that postulates the first wave as agricultural society, the second wave as industrial, and the third as postindustrial, often described as the information age, then the knowledge economy, followed by other epithets that focus on the significance of digital technologies; and more recently on a group of strategic technologies that emphasise the technological convergence among quantum technologies, AI technologies, complexity and systems science, genomic technologies, and nanotechnology. I have been particularly concerned in my research to examine the technological convergence referred to as ‘bioinformational’ and ‘biodigital’ that harnesses new biology and information science. A case in point concerns the convergence between quantum theory, first articulated in 1926, and information theory (an application of probability theory), developed by Claude Shannon single-handedly in 1948. The convergence sparked the ‘second quantum revolution’ based on the following:

- indeterminism

- interference

- uncertainty

- superposition

- entanglement.

So-called ‘quantum supremacy’ is not just about faster and more powerful computers but rather about solving complex problems beyond the capacity or design of classical computers. Quantum computers are built on qubits rather than ‘bits’; qubits are the basic information unit in quantum computing, the equivalent of ‘bits’ in classical computing, which is classified as binary and characterised in terms of the values ‘0’ or ‘1.’ In quantum computing, the qubit, the quantum bit, in addition to values of ‘0’ and ‘1,’ can also be represented by a superposition of multiple possible states. Unlike a classical binary bit that can be in only two possible positions, qubits ‘can represent a 0, a 1, or any proportion of 0 and 1 in the superposition of both states, with a certain probability of being a 0 and a certain probability of being a 1.’
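The quoted description of a qubit can be made concrete with a minimal sketch. It models a single qubit as a pair of real amplitudes (a simplification, since amplitudes are complex numbers in general) and applies the Born rule, under which the probability of each measurement outcome is the squared magnitude of its amplitude. The function names and the mixing angle `theta` are illustrative assumptions and are not taken from any quantum computing library.

```python
import math
import random


def qubit(theta):
    """Return amplitudes (a0, a1) for cos(theta/2)|0> + sin(theta/2)|1>."""
    return (math.cos(theta / 2), math.sin(theta / 2))


def probabilities(state):
    """Born rule: the probability of each outcome is the squared amplitude."""
    a0, a1 = state
    return (a0 ** 2, a1 ** 2)


def measure(state, rng=random):
    """Simulate one measurement; the result is always a definite 0 or 1."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


# theta = 0 gives a definite 0, theta = pi a definite 1,
# and theta = pi / 2 an equal superposition of both.
equal_mix = qubit(math.pi / 2)
p0, p1 = probabilities(equal_mix)
```

The sketch shows why a qubit is not simply a third value beside 0 and 1: the state carries a continuous ‘proportion’ of both, while any single measurement still returns one classical bit.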

While quantum computing is still at an early stage, it has developed a raft of applications in financial markets and climate change, and an ecosystem of architects and developers is now taking shape around a stack of quantum hardware, control systems responsible for gate operations, quantum integration and error correction, a software layer to implement algorithms, a quantum-classical interface, and companies using quantum computing to address real-life problems. The consulting firm BCG indicates that, by 2018, 60 investments worth approximately $700 million since 2012 had taken their place alongside ‘market-ready technologies such as blockchain (1,500 deals, $12 billion, not including cryptocurrencies) and AI (9,800 deals, $110 billion).’ The BCG report ‘The Next Decade in Quantum Computing – and How to Play’ indicates:

A regional race is also developing, involving large publicly funded programs that are devoted to quantum technologies more broadly, including quantum communication and sensing as well as computing. China leads the pack with a $10 billion quantum program spanning the next five years, of which $3 billion is reserved for quantum computing. Europe is in the game ($1.1 billion of funding from the European Commission and European member states), as are individual countries in the region, most prominently the UK ($381 million in the UK National Quantum Technologies Programme). The US House of Representatives passed the National Quantum Initiative Act ($1.275 billion, complementing ongoing Department of Energy, Army Research Office, and National Science Foundation initiatives). Many other countries, notably Australia, Canada and Israel, are also very active.

The UK Government Science Office was among the very first to release a planning document that outlined potential impacts for the British economy. The Quantum Age: Technological Possibilities focuses on the key technologies under development and the specific applications of quantum clocks, quantum imaging, quantum sensing and measurement, quantum computing and simulation and quantum communications. The thrust of the report is how to engineer a convergence between the research base and British industry to capitalise on competitive advantage. This is part of the UK National Quantum Technologies Programme (NQTP), which ‘is a £1 billion dynamic collaboration between industry, academia and government’ representing ‘the fission of a leading-edge science into transformative new products and services.’ Quantum technologies (QT) are seen as the ‘digital backbone and advanced manufacturing base’ of the UK’s ‘quantum enabled economy.’ With support for some 470 PhD students and fourteen fellows, the programme is seen to be a core ingredient of the UK’s development as a world science superpower. The current British PM Boris Johnson wants to increase research spending from nearly £15bn a year to £22bn by 2025, using public investment to trigger waves of private investment.

It is worth noting that EPCC, the UK’s largest supercomputing centre and part of the University of Edinburgh, provides HPC services, and that its exascale research is now over thirteen years old. Weiland and Parsons, in a retrospective of the EPCC Exascale journey, suggest: ‘By 2022, we will be ready to host the UK’s first Exascale supercomputer and hope to make this a reality for the UK’s science agency, UK Research & Innovation, by 2024.’ The article summarises investigations into energy efficiency, programming language interoperability, application performance and I/O solutions.

The Quantum Age

The global competition would be won ‘by the countries that complete their Third Wave transformation with the least amount of domestic dislocation and unrest.’ This idea is not too far off the mark, except that the Tofflers perceived the process as inevitable and a natural consequence of cultural evolution. They embedded the paradigm too deeply in the split US politics of the time, ignoring the fundamental transformation of China and East Asia, with years of investment in strategic technologies and the possibilities for an emergent techno-system parallel to the West. In terms of supercomputing, the simplest measure, China now leads the US by 186 systems to 123 on the Top 500 list. Jack Dongarra, who specialises in ‘numerical algorithms in linear algebra, parallel computing, use of advanced-computer architectures, programming methodology, and tools for parallel computers,’ has been involved in evaluating the performance of supercomputers for the list since 1993. The list is extensive and is updated twice a year.

‘The Quantum Age’ is now an accepted description for ‘a revolution led by our understanding of the very small,’ as Brian Clegg puts it:

Technologies based on quantum physics account for some 35 per cent of GDP, and quantum behaviour lies at the heart of every electronic device. Quantum biology explains how our ability to see, birds’ ability to navigate – and even plant photosynthesis relies directly on quantum effects.

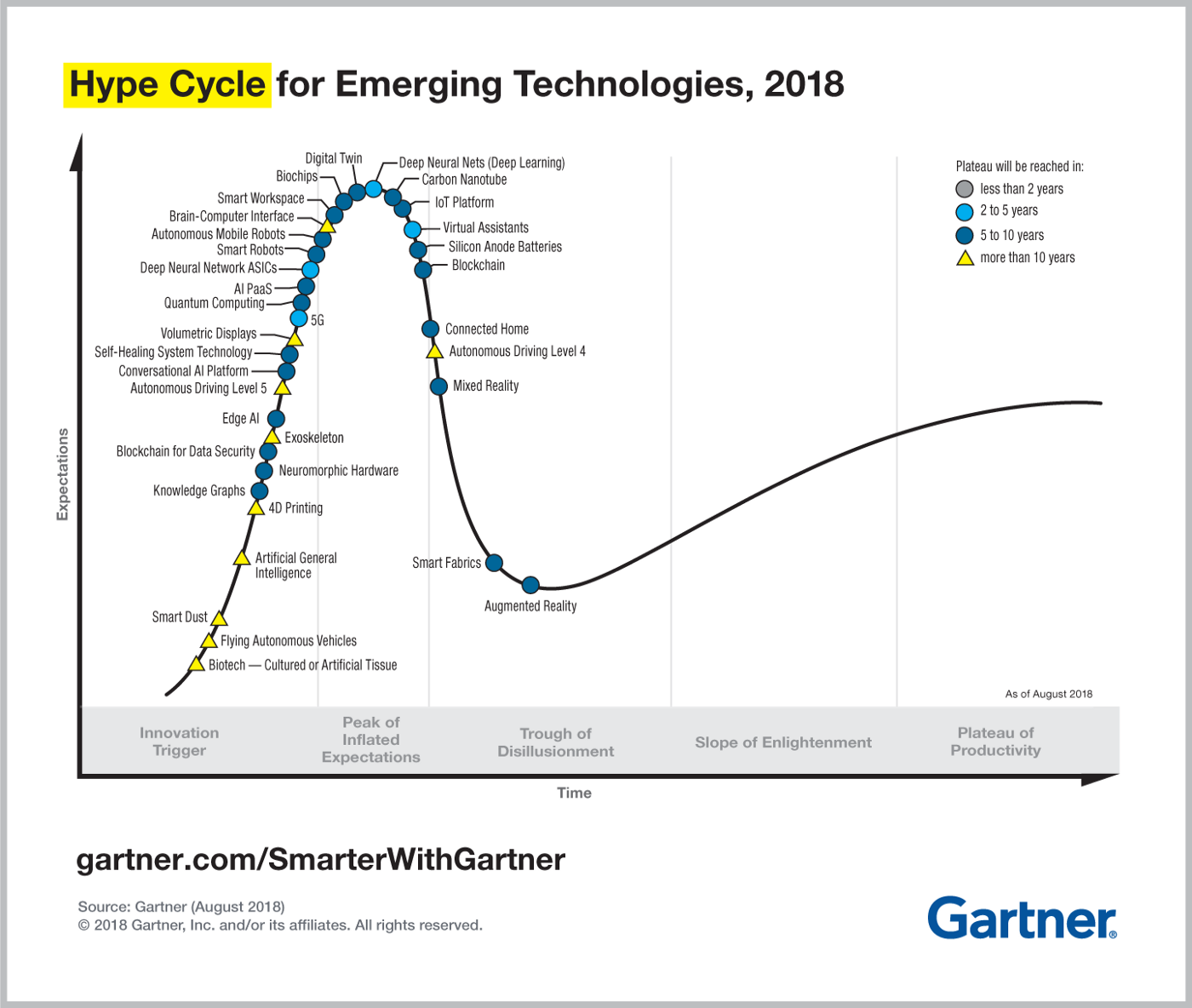

The Quantum Age is already touted as the next wave of technology. As Chris Jay Hoofnagle and Simson L. Garfinkel put it in their forthcoming book Law and Policy for the Quantum Age: ‘Quantum technologies are poised to change our lives. Nation-states are pouring billions into basic research. Companies operate secretive, moon-shot research labs seeking to build quantum computers. Militaries and intelligence agencies operate ambitious research projects to monitor adversaries.’ One website suggests that quantum computing is still finding its footing and provides a Hype Cycle for Emerging Technologies.

From https://miro.medium.com/max/1400/0*Wljo3rFRJjOt1Cra

Matt Swayne lists the ‘Top 18 Quantum Computing Research Institutions 2022,’ with access to significant papers, including: IBM, MIT, Harvard University, Max Planck Institute, University of California, Chinese Academy of Science, University of California, Berkeley, University of Maryland, Princeton University, Google PCRC, University of Tokyo, University of Science and Technology of China, University of Washington, University of Oxford, Duke University, National Institute of Standards and Technology, Stanford University, and California Institute of Technology. In terms of exascale systems, the US has 3 and China 10.

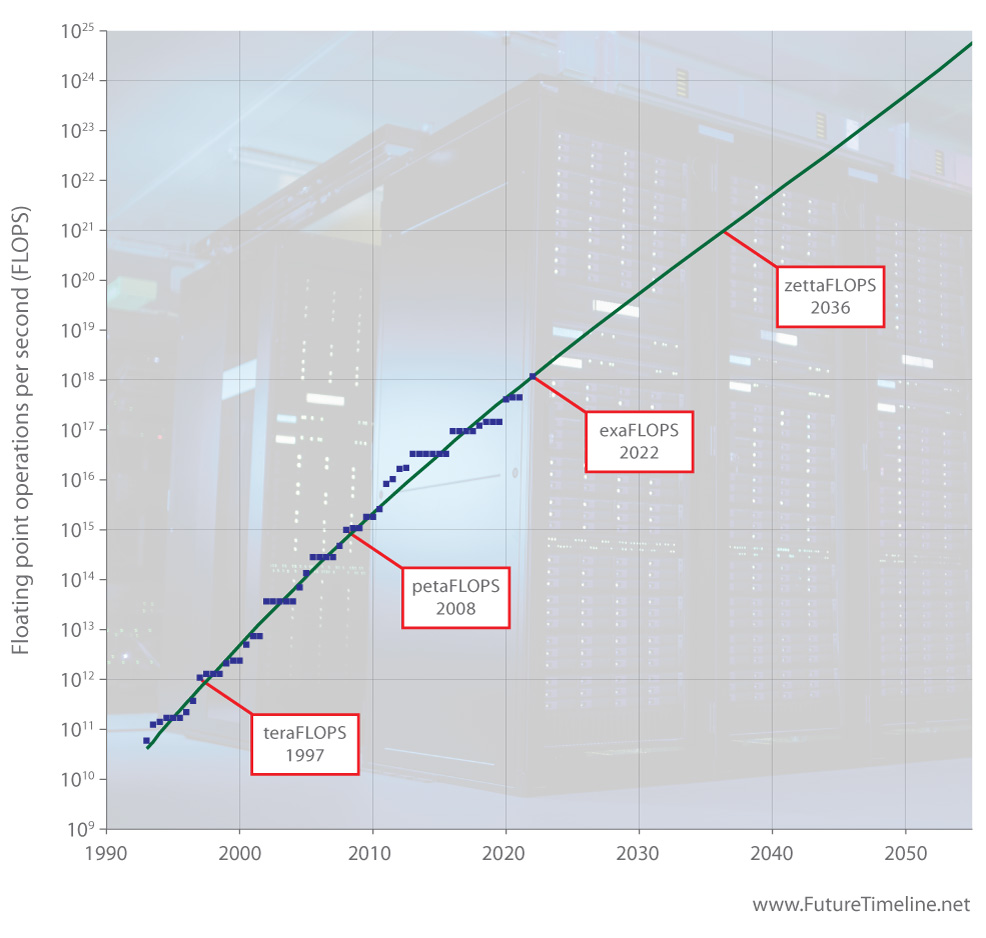

Exascale computing refers to computing systems capable of at least one exaflop, or a billion billion (10^18) calculations per second. That is 50 times faster than the most powerful supercomputers being used today and represents a thousand-fold increase over the first petascale computer that came into operation in 2008.

Richard Waters of the Financial Times reports ‘US rushes to catch up with China in supercomputer race,’ and indicates:

The US is about to vault into a new era of supercomputing, with a once in a decade leap forward in processing power that will have a big effect on fields ranging from climate change research to nuclear weapons testing…. China passed this milestone first and is already well on the way to building an entire generation of advanced supercomputers beyond anything yet in use elsewhere…. China already had more supercomputers on the Top 500 list of the world’s most powerful computers than any other country – 186 compared with 123 in the US. Now, by beating the US to the next big breakthrough in the field and planning a spate of such machines, it is in a position to seize the high ground of computing for years to come. The Chinese breakthrough has come in the race to build so-called exascale supercomputers, systems that can handle 10 to the power of 18 calculations per second. That makes them a thousand times faster than the first of the petaflop systems that preceded them more than a decade ago.

The Tofflers got it right in terms of some of the important fundamentals, but the next 25 years have dramatically changed the context from Silicon Valley and the US to one of international competition with China in supercomputing and in other strategic digital technologies related to quantum technologies in general. A recent Congressional ‘Defence Primer: Quantum Technology’ notes that, while QT has not reached maturity, it holds ‘significant implications for the future of military sensing, encryption, and communications.’ Key concepts include superposition (‘the ability of quantum systems to exist in two or more states simultaneously’), quantum bits (qubits, a unit that uses superposition to encode information) and entanglement, defined by NAS as a property in which ‘two or more quantum objects in a system can be intrinsically linked such that measurement of one dictates the possible measurement outcomes for another, regardless of how far apart the two objects are’ (cited in ‘Defence Primer’). Various defence agencies have indicated that quantum sensing, quantum computers and quantum communications hold the most promise for DOD.
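The definition of entanglement cited in the primer can be illustrated with a short sketch. It samples joint measurement outcomes from the standard two-qubit Bell state, in which only the outcomes ‘00’ and ‘11’ have non-zero amplitude. This is a classical simulation of the outcome statistics in a single measurement basis only, so it does not capture what makes entanglement non-classical; the names `BELL_STATE` and `sample_pairs` are illustrative assumptions.

```python
import math
import random

# Amplitudes of the Bell state (|00> + |11>) / sqrt(2), keyed by joint outcome.
BELL_STATE = {
    "00": 1 / math.sqrt(2),
    "01": 0.0,
    "10": 0.0,
    "11": 1 / math.sqrt(2),
}


def sample_pairs(state, shots, seed=0):
    """Sample joint outcomes with probability equal to |amplitude|^2."""
    rng = random.Random(seed)
    outcomes = list(state)
    weights = [abs(state[outcome]) ** 2 for outcome in outcomes]
    return rng.choices(outcomes, weights=weights, k=shots)


pairs = sample_pairs(BELL_STATE, shots=1000)

# Each qubit taken alone looks like a fair coin flip,
# yet the two results in every pair agree.
always_agree = all(first == second for first, second in pairs)
```

In this toy model, learning the result for the first qubit fixes the result for the second, which is the sense in which measurement of one ‘dictates the possible measurement outcomes for another.’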

Back in 2012, John Preskill explored quantum information science at the ‘entanglement frontier,’ which classical systems cannot simulate easily. Preskill indicated that large-scale quantum computing needed to overcome the problem of decoherence, the loss of information from a system into the environment. The world’s first exascale computer has recently been confirmed: Oak Ridge National Laboratory announced that Frontier has demonstrated 1.1 Exaflop performance or 1.1 quintillion calculations a second. ORNL noted, ‘if each person on Earth completed one calculation per second, it would take more than four years to do what an exascale computer can do in one second.’
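ORNL’s comparison is easy to check with back-of-the-envelope arithmetic. The sketch below assumes a world population of roughly 8 billion and a year of 365.25 days; both figures are assumptions introduced here for illustration rather than values given by ORNL.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60

EXAFLOP = 10 ** 18   # calculations per second at exascale
PETAFLOP = 10 ** 15  # calculations per second at petascale


def years_for_humanity(ops_per_second, population):
    """Years needed for `population` people, each doing one calculation
    per second, to match one second of the machine's work."""
    seconds = ops_per_second / population
    return seconds / SECONDS_PER_YEAR


frontier_ops = 1.1 * EXAFLOP      # performance figure reported by ORNL
world_population = 8_000_000_000  # assumption: roughly 8 billion people

years = years_for_humanity(frontier_ops, world_population)
speedup_over_petascale = EXAFLOP // PETAFLOP
```

Under these assumptions `years` comes out at a little over four, in line with ORNL’s statement, and the ratio of an exaflop to a petaflop is a factor of one thousand.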

There are claims that China reached exascale first on two separate systems with speeds of 1.3 Exaflop/s on both the Oceanlite and Tianhe-3 systems. The Exascale Computing Project (ECP) presents the case for exascale computing:

To maintain leadership and to address future challenges in economic impact areas and threats to security, the United States is making a strategic move in HPC – a grand convergence of advances in codesign, modelling and simulation, data analytics, machine learning, and artificial intelligence. The success of this convergence hinges on achieving exascale, the next leap forward in computing.

The exponential increase in memory, storage, and compute power made possible by exascale systems will drive breakthroughs in energy production, storage, and transmission; materials science; additive manufacturing; chemical design; artificial intelligence and machine learning; cancer research and treatment; earthquake risk assessment; and many other areas.

From https://www.futuretimeline.net/blog/2022/05/30-supercomputer-future-timeline.htm

The graph indicates the technological progress and what is to come, and there are indications of other related developments concerning the quantum internet, the future of teleportation and the fault-tolerant universal quantum gate.

Chen Mo, Chief Solution Architect for Huawei, suggests that supercomputing is the next stage of technological evolution. China is focused on five supercomputing trends: diversified computing, all-optical networks, intensified data, containerised applications, and converged architecture. With data-intensive supercomputing at the core, these trends present new challenges: ‘First, the amount of data involved in computing has increased dramatically’ with new types of data; ‘Second, computing power has increased dramatically’ enabling many concurrent tasks; ‘Third, higher reliability is required’ with higher requirements for storage; and ‘Fourth, supercomputing centres and data centres need to converge’ as data mobility has become the biggest problem: ‘Mobilising stored data is the biggest challenge facing supercomputing centres.’

Data-intensive supercomputing serves as a data-centric, high-performance data analytics platform with the analytics capabilities of traditional supercomputing, big data analytics, and AI. It supports end-to-end scientific computing services through application-driven, unified data sources. It also provides diversified computing power for both research and business, and provides high-level data value services leveraging accumulated knowledge about data.

Excursus: The new paradigm of ‘nano-bio-info-cogno’ (NBIC) technologies

Supercomputing is seen as the future of genomics research, as Gabriel Broner pointed out over five years ago:

We are at an inflection point in the field of genomics. The cost of sequencing a human genome is less than $1,000 today, and expected to drop even further (compared to the $3 billion cost in 2003). With the cost of sequencing declining and the processes becoming more common, data is on the rise as a single human genome ‘run’ produces half a terabyte of raw data image files. These files are complex, with highly granular, unstructured scientific data that is difficult to manage and analyse.

Broner indicates the massive amount of unstructured genomics data being generated in academic, clinical, and pharmaceutical sites and the increasing demands for high-speed analytics with the flexibility to undertake complex genomic sequencing processes.

I have provided an account of technological convergence in terms of the new paradigm of ‘nano-bio-info-cogno’ (NBIC) technologies adopted by the US National Science Foundation that is expected to drive the next wave of scientific research, technology and knowledge economy, indicating: ‘The “deep convergence” representing a new technoscientific synergy is the product of long-term trends of “bioinformational capitalism” that harnesses the twin forces of information and genetic sciences that coalesce in the least mature “cognosciences” in their application to education and research.’ I use the term ‘technopolitics,’ after Lyotard and Hottois, to examine the uses to which the new paradigm has been put in terms of human bodies and institutions.

Bioinformational capitalism is a concept that I explored in an earlier paper, where, building on the separate literatures on ‘biocapitalism’ and ‘informationalism,’ I attempted to develop the concept of ‘bio-informational capitalism’:

to articulate an emergent form of capitalism that is self-renewing in the sense that it can change and renew the material basis for life and capital as well as program itself. Bio-informational capitalism applies and develops aspects of the new biology to informatics to create new organic forms of computing and self-reproducing memory that, in turn, has become the basis of bioinformatics.

NBIC technologies signal an emerging new synthesis where different technologies allow for a radical and progressive convergence at the nanolevel at an accelerating rate for the first time in human history with, for example, genetic engineering with DNA molecules, imaging with quantum dots, targeted drugs with nanoparticles, and biocompatible prosthesis with molecules by design. Biology as digital information, and digital information as biology, are now interrelated, and biodigitalism, along with bioinformation, anchored in nanoscience, emerges as a unified ecosystem that can address the constellation of forces shaping the future of human culture and human ontologies.

Biodigital Convergence, Biodigital Ethics and Indigenous Biopolitics

Against the defence or military application, Keith Williams and Suzanne Brant provide an indigenous view describing the intersection and merging of these technologies, such as using mRNA vaccines to treat COVID-19, ‘digitally controlled surveillance insects, [and] microorganisms genetically engineered to produce medicinal compounds.’ They explore the convergence from a Haudenosaunee philosophical perspective to suggest that insights concerning ‘relationality’ and ‘territory’ ‘may be necessary for humanity to adapt to the profound and existential changes implicit in the biodigital convergence.’ The contrast could not be plainer and certainly provides a useful argument for biodigital ethics and indigenous biopolitics. The authors respond to Policy Horizons Canada, which recently provided a scoping paper titled Exploring Biodigital Convergence. Policy Horizons Canada describes itself in the following terms:

We are a federal government organisation that conducts foresight. Our mandate is to help the Government of Canada develop future-oriented policy and programs that are more robust and resilient in the face of disruptive change on the horizon. To fulfil our mandate, we:

- Analyse the emerging policy landscape, the challenges that lie ahead, and the opportunities opening up.

- Engage in conversations with public servants and citizens about forward-looking research to inform their understanding and decision making.

- Build foresight literacy and capacity across the public service.

We produce content that may attract academic, public and international attention, and do not publish commentary on policy decisions of the Government.

In terms of governance, ‘Policy Horizons reports through the Deputy Minister of Employment and Social Development Canada (ESDC) to the Minister of Employment, Workforce Development and Disability Inclusion.’

Williams and Brant summarise the main claims of the Canadian scoping paper concerning biodigital convergence thus:

- The complete integration of biological and digital entities in which new, hybrid forms of life are created through the integration of digital technologies in living systems and the incorporation of biological components in digital technologies. Policy Horizons Canada (2020) draws on recent reports of the use of digitally controlled dragonflies and locusts for surveillance purposes and digital implants in humans, for medical purposes, to illustrate this category of biodigital convergence.

- The coevolution of biodigital technologies occurs when innovations in either biological systems or digital technologies lead to progress in the other domain that would have otherwise been impossible. Two examples include gene sequencing technologies paired with Artificial Intelligence leading to the engineering of biological organisms that can synthesise organic compounds in unusual ways and the CRISPR or Cas9 approach – a technology derived from bacteriophage genes and associated enzymes – facilitating gene editing in higher organisms.

- The conceptual convergence of biological and digital systems in which greater understandings of the theoretical underpinnings and mechanisms governing both biological and digital systems could lead to a paradigmatic shift in our understandings of these interacting systems. For example, the increasing recognition that complex digital technologies operate like biological systems has led to their description as technology ecosystems.

The idea of new ‘hybrid forms of life’ immediately flags some critical governmentality issues, and, while the scoping document refers to ‘a paradigmatic shift in our understandings of these [biodigital] interacting systems,’ there is as yet little overt philosophical appreciation of the ethical and political issues. The paper begins with an indication that we face ‘another disruption of similar magnitude’ to the digital age: ‘Digital technologies and biological systems are beginning to combine and merge in ways that could be profoundly disruptive to our assumptions about society, the economy, and our bodies. We call this the biodigital convergence’ (emphasis given). Kristel Van der Elst, Director General of Policy Horizons Canada, in her Foreword embraces the magnitude of the future ‘disruption’ in expansive language and considers its ability to shape social and ethical discourse:

In the coming years, biodigital technologies could be woven into our lives in the way that digital technologies are now. Biological and digital systems are converging, and could change the way we work, live, and even evolve as a species. More than a technological change, this biodigital convergence may transform the way we understand ourselves and cause us to redefine what we consider human or natural.

Biodigital convergence may profoundly impact our economy, our ecosystems, and our society. Being prepared to support it, while managing its risks with care and sensitivity, will shape the way we navigate social and ethical considerations, as well as guide policy and governance conversations.

Williams & Brant respond directly to the axiological and ontological struggles that face a ‘deep state’ conception and argue for a default position that rests on ‘the collective benefits of humanity’:

many of our thoughts on the subject of biodigital convergence are both axiological and ontological in nature. How do we value our selves and other selves? Who are we, and does our personhood extend to include all our relations and the technologies that we use on a daily basis? Biodigital innovation is already happening, and there is certainly the potential for positive benefits to humanity, all our relations, and more broadly to Mother Earth.

Other critics are not so utopian. One source indicates that the ‘deep state’ is ‘not hiding their intentions anymore. The gene-editing CRISPR technology is already being promoted and implemented via the mRNA “vaccines,” the disastrous outcome of which is just beginning to emerge, although the media is not talking about it’ with some concerns about mail-order bioengineering or CRISPR kits, the ability to create lab-grown meat, and the reliance on data.

Writing nearly a decade ago, Kate O’Riordan expressed concern about ‘personal genomics and direct-to-consumer (DTC) genomics’: ‘The practices around the generation, circulation and reading of genome scans do not just raise questions about biomedical regulation, they also provide the focus for an exploration of how contemporary public participation in genomics works,’ especially where ‘genome sequences circulate as digital artefacts,’ ‘digital genomic texts that promise empowerment, personalisation and community,’ offering ‘participation in biodigital culture’ but which may also ‘obscure the compliance and proscription’ with biomedical regulation.

Biofeedback technologies in the 1960s provided principles to teach us how to control specific symptoms. Today we can ‘measure factors of the body electric such as the voltage of the firing brain cells, the amperage of the heart muscle contractions, the voltage of the muscles and the resistance to the flow of electricity of the skin. We can measure the oscillations of these factors as seen by the EEG, ECG, EMG and GSR … CAT Scan, MRI, TENS, ultra-sound, and many other sophisticated biotechnologies are used routinely in our healthcare systems.’ And this is only the beginning, as scientists are discovering that quantum effects play a fundamental role in biological processes such as enzyme activity, photosynthesis, and animal navigation. As Marais et al. indicate, ‘Developments in observational techniques have allowed us to study biological dynamics on increasingly small scales. Such studies have revealed evidence of quantum mechanical effects, which cannot be accounted for by classical physics, in a range of biological processes.’ They go on to write:

All living systems are made up of molecules, and fundamentally all molecules are described by quantum mechanics. Traditionally, however, the vast separation of scales between systems described by quantum mechanics and those studied in biology, as well as the seemingly different properties of inanimate and animate matter, has maintained some separation between the two bodies of knowledge. Recently, developments in experimental techniques such as ultrafast spectroscopy [6], single-molecule spectroscopy [7–11], time-resolved microscopy [12–14] and single-particle imaging [15–18] have enabled us to study biological dynamics on increasingly small length and time scales, revealing a variety of processes necessary for the function of the living system that depend on a delicate interplay between quantum and classical physical effects.

Problems arise not only for standard bioethics in relation to indigenous populations but also for subjectivity effects in general, especially as they are promoted through the systems and networks of new media technologies. Ozum Hatipoglu provides us with ‘the biocybernetic revolution in theory and art’ in terms of ‘trans-media’ in ways that mirror quantum effects. She writes about how we might come to understand ‘bodies, subjectivities, identities, and sexualities’ in these terms:

We no longer exist in the realm of mechanical reproducibility; we now inhabit one in which biology and information theories unexpectedly combine to produce a new, simulacral form of technological reproduction in which bodies and organisms become affective codes, scripted texts, and discursive and non-discursive modes of communication flowing among various media networks and systems. This bioinformatic revolution signifies not only the conditions of new forms of biopolitics and its various regulatory and oppressive mechanisms determining, controlling, and constructing the actual life, the social reality or lived social relations that are shared across and between various networks and systems, but it also provides us with theoretical and practical tools that enable transgressive and subversive tactics, strategies, and approaches that transform the aesthetic stakes of everyday life by suggesting new ways of thinking about bodies, subjectivities, identities, and sexualities.

Hatipoglu is primarily concerned with the contemporary conception of systems and networks and the complex ways in which new media technologies transform the aesthetics of existence by opening new spaces of creation, disruption, subversion, and exploration: ‘systems and networks as complex, dynamic, contingent, open, vital, heterogeneous, process-driven, non-linear, multiple and interactive entities.’

New media technologies emphasise the convergence of the biological and the technological, alluding to human being as a biotechnological entity made up of various networks and systems. Trans-networks correspond to continuously changing and transforming dynamic connections. The human being conceived of as a biotechnological hybrid questions the existence and presence of anything biological and natural. It subverts anything normalised within the identificatory regimes of the heteronormative matrix of sex and gender.

Postscript on Biodigital Philosophy

Much mainstream philosophy is premised on ontologies and epistemologies represented by the world of classical physics that operates on principles, criteria and standards that do not apply at the level of quantum mechanics. It is clear, for instance, that quantum mechanics impacts on notions of reality – whether naïve, structural, critical or bounded. It also calls into question standard objectivist, constructionist and subjectivist epistemologies. It seems, for instance, as physicist Sean Carroll puts it: ‘The fundamental nature of reality could be radically different from our familiar world of objects moving around in space and interacting with each other. We shouldn’t fool ourselves into mistaking the world as we experience it for the world as it really is.’ Elsewhere, Carroll defends ‘the extremist position that the fundamental ontology of the world consists of a vector in Hilbert space evolving according to the Schrödinger equation.’ One aspect of quantum mechanics that calls into question measurement and objectivity concerns the role of the observer. Proietti et al. make the point this way:

The observer’s role as final arbiter of universal facts was imperilled by the advent of 20th-century science. In relativity, previously absolute observations are now relative to moving reference frames; in quantum theory, all physical processes are continuous and deterministic, except for observations, which are proclaimed to be instantaneous and probabilistic. This fundamental conflict in quantum theory is known as the measurement problem, and it originates because the theory does not provide a precise cut between a process being a measurement or just another unitary physical interaction.

They suggest that ‘The scientific method relies on facts, established through repeated measurements and agreed upon universally, independently of who observed them. In quantum mechanics, the objectivity of observations is not so clear, most markedly exposed in Wigner’s eponymous thought experiment where two observers can experience seemingly different realities.’ The quantum measurement problem, understood as ‘the difficulty of reconciling the (unitary, deterministic) evolution of isolated systems and the (non-unitary, probabilistic) state update after a measurement,’ suggests that observation is relative to the observer. Bong et al., in a thought experiment, prove that ‘if quantum evolution is controllable on the scale of an observer, then’ the proposition ‘that every observed event exists absolutely, not relatively – must be false.’

Biodigital philosophy proceeds on the assumptions of quantum theory but also identifies and explores the possibilities of technological convergence, in particular the convergence of the digital and the biological at the nanoscale. In this, it must map the histories and trajectories of different technological convergences at the level of individual disciplines and fields, and adopt a critical stance on the struggle over ontologies and, perhaps most importantly, on the ethical consequences of commercialising biodigital technologies. This means providing an understanding of the narratives of quantum theory for lay publics – those centring on superposition, entanglement and contextuality – and of the seemingly more straightforward pragmatic approaches that focus on technological applications rather than aesthetic or political conceptions. Given the speed of developments and their role in decisions about future security and economic development, it is also important to map emergent tendencies, new applications and the ‘cyber-physical architectures’ within which they become embedded. As Dixon et al. express it in discussing the future of bio-informational engineering:

The practices of synthetic biology are being integrated into ‘multiscale’ designs enabling two-way communication across organic and inorganic information substrates in biological, digital and cyber-physical system integrations. Novel applications of ‘bio-informational’ engineering will arise in environmental monitoring, precision agriculture, precision medicine and next-generation biomanufacturing. Potential developments include sentinel plants for environmental monitoring and autonomous bioreactors that respond to biosensor signalling. As bio-informational understanding progresses, both natural and engineered biological systems will need to be reimagined as cyber-physical architectures. We propose that a multiple length scale taxonomy will assist in rationalising and enabling this transformative development in engineering biology.

The National Science Foundation (NSF) indicates the stakes in its 2022 program for Semiconductor Synthetic Biology Circuits: semiconductor-based information technologies are approaching their physical limits. With huge gains in supercomputing but severe limitations on storage, NSF has turned to the prospect of synthetic biology and ‘biomolecules as carriers of stored digital data for memory applications,’ in order to meet future information storage needs. As the NSF explains: ‘Understanding the principles of information processing, circuits, and communications in living cells could enable new generations of storage techniques.’ While there have been several promising breakthroughs, especially with low-energy biological systems that approach the thermodynamic limits, ‘sub-microscopic computing systems remain elusive.’ The emphasis is on ‘the sub-microscopic design challenges and offers new solutions for future nano and quantum systems for information processing and storage.’ The NSF SemiSynBio-III program ‘seeks to further explore and exploit synergies between synthetic biology and semiconductor technology’ with ‘the potential to lead to a new technological boom for information processing and storage.’ The goal of the SemiSynBio-III research program is directed at ‘novel high-payoff solutions for the information storage and information processing,’ as well as ‘fundamental research in synthetic biology integrated with semiconductor capabilities’ in the ‘design and fabrication of hybrid and complex biomaterial systems for extensive applications in biological communications, information processing technologies, sensing and personalised medicine.’ The technological convergence here is talked about in terms of the integration and application of advances in synthetic biology that involve ‘hybrid living cell structures, cell-inspired and cell-based physical phenomenon to microelectronics and computational storage systems,’ which, it is anticipated, will lead to a ‘new generation of prototypical highly robust and scalable bio-storage systems inspired by mixed-signal electronic design for future computing applications.’

Future computing systems with ultra-low energy storage can be built on principles derived from organic systems that are at the intersection of biology, physics, chemistry, materials science, computer science and engineering. Next-generation information storage technologies can be envisioned that are driven by biological principles with use of biomaterials in the fabrication of devices and systems that can store data for more than 100 years with a storage capacity 1,000 times more than current storage technologies. Such a research effort can have a significant impact on the future of information storage technologies.

The NSF acknowledges there are ‘fundamental scientific issues and technological challenges associated with the underpinnings of synthetic biology integrated with semiconductor technology’ and indicates that this research ‘will foster interactions among various disciplines including biology, physics, chemistry, materials science, computer science and engineering that will enable in heretofore-unanticipated breakthroughs.’

This is a clear example of a program designed to provide a bioinformational breakthrough in an area of strategic significance for semiconductor technology and the future of the industry on which much of the progress of future supercomputing depends. The development of organic or natural memory, storage and processing offers a new generation of biodigital technologies where ‘Biologically inspired technologies based on biomolecule-based modalities offer the potential for a dramatic increase in computing, sensing, and information storage capabilities.’ The NSF program comprises five research themes:

- Developing computational and experimental models of bio-molecular and cellular-based systems.

- Addressing fundamental research questions at the interface of biology and semiconductors.

- Designing sustainable bio-materials for novel bio-nano hybrid architectures and circuits that test the limits in transient electronics.

- Fabricating hybrid biological-semiconductor electronic systems with storage functionalities.

- Scaling-up and characterisation of integrated hybrid synthetic bio-electronic storage systems.

As the program notes specify, ‘Information processing plays a central role in enabling the functionality of biological systems, from the molecular to the ecological scale. Semiconductor technology has provided revolutionary tools and instrumentation for synthesis and sequencing of biomolecules’ with the goal of developing new methodologies and models that embrace ‘the complexity of multi-scale integrated bio-electronic systems.’

Biodigitalism is at the heart of possible breakthrough discoveries in bio-electronic systems and is also the basis of biodigital convergence in DNA synthesis and shuffling, bioprospecting, combinatorial chemistry, and high-throughput screening. It is not merely convergence but equally ‘synthesis’ and ‘integration’ where there are prospects and possibilities in genomic medicine and biopharming, and also in agriculture, where plants become production platforms. These developments have sparked claims for the emergence of the bioeconomy and ‘its contribution to global food security, to climate, biodiversity and environmental protection and to a better quality of life,’ as the German Bioeconomy Council puts it. Most often, the problem with these claims is that they are not embedded in the existing political economy and, on the whole, do not sufficiently, if at all, consider questions of ownership and the way biodigital technologies may create monopolies and infringe the rights of indigenous groups and individuals. These ethico-political issues are central to biodigital philosophy. The critical sociology of biodigitalism needs to focus on the mergers, amalgamations, collaborations and start-ups that are taking place in the company reorganisations of information and synthetic biology, and the implications for the emerging biodigital science economy.